AI tools for Meta Llama WhatsApp demo application

Related Tools:

Meta Llama

Meta Llama is an AI-powered chatbot that helps you write better. It can help you with a variety of writing tasks, including generating text, translating languages, and writing different kinds of creative content.

Reflection 70B

Reflection 70B is a next-gen open-source LLM built on Llama 70B, offering self-correction capabilities that it claims outperform GPT-4. It provides AI-powered conversations, assists with various tasks, and emphasizes accuracy and reliability. Users can engage in human-like conversations and receive assistance in research, coding, creative writing, and problem-solving, all while benefiting from its self-correction mechanism. Reflection 70B is designed to enhance productivity and decision-making across multiple domains.

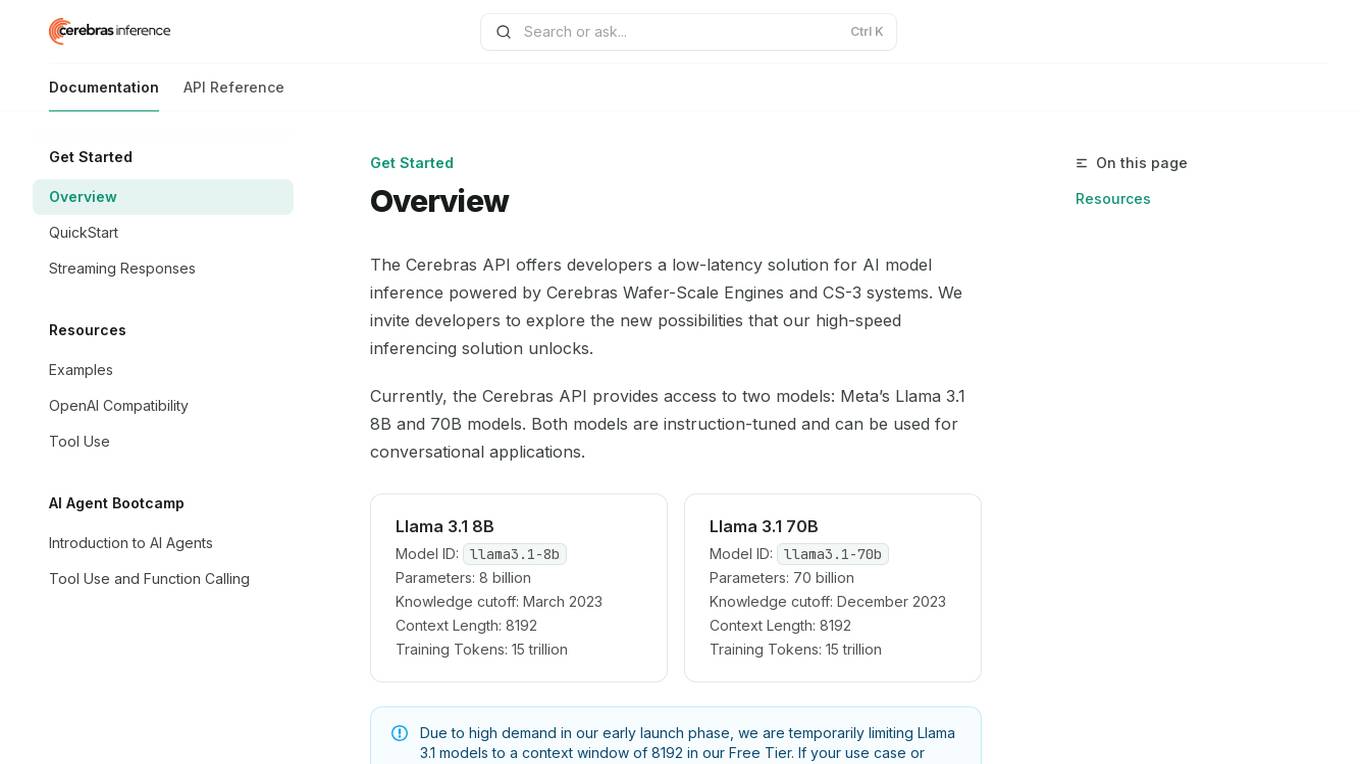

Cerebras API

The Cerebras API is a high-speed inference service powered by Cerebras Wafer-Scale Engines and CS-3 systems. It gives developers access to two instruction-tuned models suited to conversational applications: Meta’s Llama 3.1 8B and Llama 3.1 70B. The API provides low-latency solutions and invites developers to explore new possibilities in AI development.

Privatemode AI

Privatemode is an AI service that offers always encrypted generative AI capabilities, ensuring data privacy and security. It allows users to utilize open-source AI models while keeping their data protected through confidential computing. The service is designed for individuals and developers, providing a secure AI assistant for various tasks like content generation and document analysis.

Monitr

Monitr is a data visualization and analytics platform that allows users to query, visualize, and share data in one place. It helps in tracking key metrics, making data-driven decisions, and breaking down data silos to provide a unified view of data from various sources. Users can create charts and dashboards, connect to different data sources like PostgreSQL and MySQL, and collaborate with teammates on SQL queries. Monitr's AI features are powered by Meta AI's Llama 3 LLM, enabling the development of powerful and flexible analytics tools for maximizing data utilization.

Meta AI

Meta AI is an intelligent assistant that offers a range of AI experiences for users, including answering questions, providing advice, creating images, and more. Users can also create their own AI characters or explore AIs made by others through AI Studio. The platform aims to empower users to connect with what matters to them and discover new possibilities through AI technology.

Chat With Llama

Chat with Llama is a free website that allows users to interact with Meta's Llama 3, a state-of-the-art AI chat model comparable to ChatGPT. Users can ask unlimited questions and receive prompt responses. Llama 3 is open-source and commercially available, enabling developers to customize and profit from AI chatbots. The model has 70 billion parameters, and the site states that its outputs match the quality of GPT-4.

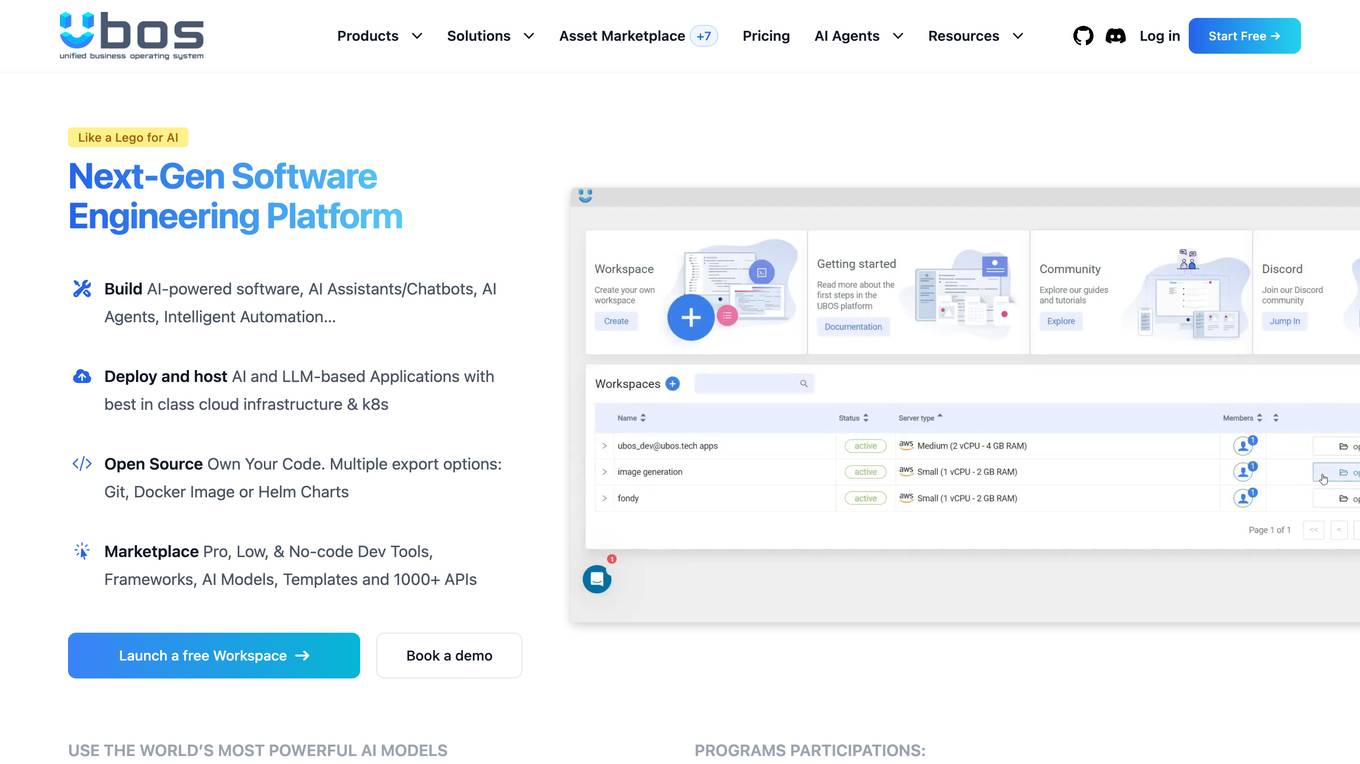

UBOS

UBOS is an engineering platform for Software 3.0 and AI Agents, offering a comprehensive suite of tools for building enterprise-ready internal development platforms, web applications, and intelligent workflows. It enables users to connect to over 1000 APIs, automate workflows with AI, and access a marketplace with templates and AI models. UBOS empowers startups, small and medium businesses, and large enterprises to drive growth, efficiency, and innovation through advanced ML orchestration and custom Generative AI integration. The platform provides a user-friendly interface for creating AI-native applications, leveraging Generative AI, Node-RED, SCADA, Edge AI, and IoT technologies. With a focus on open-source development, UBOS offers full code ownership, flexible exports, and seamless integration with leading LLMs like ChatGPT and Llama 2 from Meta.

Meta AI

Meta AI is an advanced artificial intelligence tool that enables users to learn, create, and explore the world around them. With features like AI Studio for creating custom AIs and Llama for building the future of AI, Meta AI offers cutting-edge technology to bring visions to life. Users can engage with AI characters, identify objects, and have conversations using voice commands. The platform is designed to make AI more accessible and engaging for everyone, with a focus on open collaboration and innovation.

ColossalChat

ColossalChat is a chatbot powered by LLaMA, a large language model from Meta AI. It can generate human-like text, translate languages, write different kinds of creative content, and answer your questions in a comprehensive and informative way. It is designed to be safe and inoffensive, but it may occasionally make mistakes. Please report any issues you encounter so that we can improve the chatbot.

Fluid

Fluid is a private AI assistant designed for Mac users, specifically those with Apple Silicon and macOS 14 or later. It offers offline capabilities and is powered by the advanced Llama 3 AI by Meta. Fluid ensures unparalleled privacy by keeping all chats and data on the user's Mac, without the need to send sensitive information to third parties. The application features voice control, one-click installation, easy access, security by design, auto-updates, history mode, web search capabilities, context awareness, and memory storage. Users can interact with Fluid by typing or using voice commands, making it a versatile and user-friendly AI tool for various tasks.

Meta AI

Meta AI is a research lab dedicated to advancing the field of artificial intelligence. Our mission is to build foundational AI technologies that will solve some of the world's biggest challenges, such as climate change, disease, and poverty.

Meta AI

Meta AI is an advanced artificial intelligence tool that leverages machine learning algorithms to provide data analytics and predictive insights for businesses. It offers a user-friendly interface for users to easily upload and analyze their data, helping them make informed decisions and optimize their operations. With Meta AI, users can uncover hidden patterns in their data, generate accurate forecasts, and gain valuable business intelligence to stay ahead of the competition.

Resha

The website Resha offers a comprehensive collection of artificial intelligence and software tools in one place. Users can explore various categories such as artificial intelligence, coding, art, audio editing, e-commerce, developer tools, email assistants, search engine optimization tools, social media marketing, storytelling, design assistants, image editing, logo creation, data tables, SQL codes, music, text-to-speech conversion, voice cloning, video creation, video editing, 3D video creation, customer service support tools, educational tools, fashion, finance management, human resources management, legal assistance, presentations, productivity management, real estate management, sales management, startup tools, scheduling, fitness, entertainment tools, games, gift ideas, healthcare, memory, religion, research, and auditing.

ImageBind

ImageBind by Meta AI is an AI tool for computer vision that introduces a new way to 'link' AI across multiple senses. It is the first AI model capable of binding data from six different modalities simultaneously: images and video, audio, text, depth, thermal, and inertial measurement unit (IMU) data. By recognizing relationships between these modalities, ImageBind enables machines to analyze various forms of information together, advancing AI capabilities significantly.

Audiobox

Audiobox is an AI tool developed by Meta for audio generation. It allows users to create custom audio content by generating voices and sound effects using voice inputs and natural language text prompts. The tool includes various models such as Audiobox Speech and Audiobox Sound, all built upon the shared self-supervised model Audiobox SSL. Audiobox aims to make AI safe and accessible for everyone by providing a platform for creative audio storytelling and research in the field of audio generation.

MLflow

MLflow is an open source platform for managing the end-to-end machine learning (ML) lifecycle, including tracking experiments, packaging models, deploying models, and managing model registries. It provides a unified platform for both traditional ML and generative AI applications.

ChatGPT

ChatGPT is a leading Chinese-language learning website that offers a comprehensive AI learning experience. It provides tutorials on ChatGPT, GPTs, and AI applications, guiding users from basic principles to advanced usage. The platform also offers ChatGPT prompts for various professions and life scenarios, inspiring creativity and productivity. Additionally, its MidJourney tutorials focus on AI drawing and are particularly suitable for beginners. With AI tools like AI Reading Assistant and GPT Finder, the site aims to enhance learning, work efficiency, and business success.

llama-recipes

The llama-recipes repository provides a scalable library for fine-tuning Llama 2, along with example scripts and notebooks to quickly get started with using the Llama 2 models in a variety of use-cases, including fine-tuning for domain adaptation and building LLM-based applications with Llama 2 and other tools in the LLM ecosystem. The examples here showcase how to run Llama 2 locally, in the cloud, and on-prem.

AiTreasureBox

AiTreasureBox is a versatile AI tool that provides a collection of pre-trained models and algorithms for various machine learning tasks. It simplifies the process of implementing AI solutions by offering ready-to-use components that can be easily integrated into projects. With AiTreasureBox, users can quickly prototype and deploy AI applications without the need for extensive knowledge in machine learning or deep learning. The tool covers a wide range of tasks such as image classification, text generation, sentiment analysis, object detection, and more. It is designed to be user-friendly and accessible to both beginners and experienced developers, making AI development more efficient and accessible to a wider audience.

AITreasureBox

AITreasureBox is a comprehensive collection of AI tools and resources designed to simplify and accelerate the development of AI projects. It provides a wide range of pre-trained models, datasets, and utilities that can be easily integrated into various AI applications. With AITreasureBox, developers can quickly prototype, test, and deploy AI solutions without having to build everything from scratch. Whether you are working on computer vision, natural language processing, or reinforcement learning projects, AITreasureBox has something to offer for everyone. The repository is regularly updated with new tools and resources to keep up with the latest advancements in the field of artificial intelligence.

litellm

LiteLLM is a tool that allows you to call all LLM APIs using the OpenAI format. This includes Bedrock, Huggingface, VertexAI, TogetherAI, Azure, OpenAI, and more. LiteLLM translates inputs to each provider's `completion`, `embedding`, and `image_generation` endpoints, returns consistent output, and provides retry/fallback logic across multiple deployments. It also supports setting budgets and rate limits per project, API key, and model.
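The retry/fallback idea described above can be illustrated with a small, library-free sketch. This is not LiteLLM's implementation or API: `call_with_fallback`, the deployment names, and the stub providers are all hypothetical, and the response only imitates the general shape of an OpenAI-style chat completion.

```python
from typing import Callable, Dict, List, Tuple

Message = Dict[str, str]
# A "provider" here is any callable that takes OpenAI-style messages
# and returns the assistant's reply text (hypothetical interface).
Provider = Callable[[List[Message]], str]


def call_with_fallback(
    deployments: List[Tuple[str, Provider]],
    messages: List[Message],
    retries_per_deployment: int = 2,
) -> Dict[str, object]:
    """Try each deployment in order, retrying before falling back."""
    errors: List[str] = []
    for name, provider in deployments:
        for attempt in range(1, retries_per_deployment + 1):
            try:
                text = provider(messages)
            except Exception as exc:  # record the failure and retry
                errors.append(f"{name} attempt {attempt}: {exc}")
                continue
            # Normalize every provider's reply into one consistent shape.
            return {
                "model": name,
                "choices": [
                    {"message": {"role": "assistant", "content": text}}
                ],
            }
    raise RuntimeError("all deployments failed: " + "; ".join(errors))


def flaky_provider(messages: List[Message]) -> str:
    # Stub that always fails, standing in for an unavailable deployment.
    raise ConnectionError("simulated outage")


def echo_provider(messages: List[Message]) -> str:
    # Stub that echoes the last message, standing in for a healthy one.
    return "echo: " + messages[-1]["content"]


response = call_with_fallback(
    [("primary-deployment", flaky_provider),
     ("backup-deployment", echo_provider)],
    [{"role": "user", "content": "Hello"}],
)
print(response["model"], "->", response["choices"][0]["message"]["content"])
```

The caller sees the same response structure regardless of which deployment answered, which is the point of putting a uniform format in front of several providers.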

chatgpt-universe

ChatGPT is a large language model that can generate human-like text, translate languages, write different kinds of creative content, and answer your questions in a conversational way. It is trained on a massive amount of text data, and it is able to understand and respond to a wide range of natural language prompts.

llms-txt-hub

The llms.txt hub is a centralized repository for llms.txt implementations and resources, facilitating interactions between LLM-powered tools and services with documentation and codebases. It standardizes documentation access, enhances AI model interpretation, improves AI response accuracy, and sets boundaries for AI content interaction across various projects and platforms.

llama-cookbook

The Llama Cookbook is the official guide for building with Llama Models, providing resources for inference, fine-tuning, and end-to-end use-cases of Llama Text and Vision models. The repository includes popular community approaches, use-cases, and recipes for working with Llama models. It covers topics such as multimodal inference, inferencing using Llama Guard, and specific tasks like Email Agent and Text to SQL. The structure includes sections for 3P Integrations, End to End Use Cases, Getting Started guides, and the source code for the original llama-recipes library.

ai-clone-whatsapp

This repository provides a tool to create an AI chatbot clone of yourself using your WhatsApp chats as training data. It uses the Torchtune library for fine-tuning and inference. The code includes preprocessing of WhatsApp chats, fine-tuning models, and chatting with the AI clone via a command-line interface. Supported models are Llama3-8B-Instruct and Mistral-7B-Instruct-v0.2. Hardware requirements include approximately 16 GB of VRAM for QLoRA fine-tuning of Llama3 with a 4k context length. The repository addresses common issues like adjusting parameters for training and preprocessing non-English chats.
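As a rough illustration of the chat preprocessing step mentioned above, the sketch below turns an exported chat into role-tagged turns. It is not the repository's code: the line format (`date, time - sender: text`) is an assumption that matches only some WhatsApp export locales, and `parse_chat` and its `me` parameter are hypothetical names.

```python
import re
from typing import Dict, List

# Assumed export line format: "12/31/23, 10:15 PM - Alice: Hello".
# Real exports vary by platform and locale, so this pattern is illustrative.
LINE_RE = re.compile(
    r"^(?P<date>\d{1,2}/\d{1,2}/\d{2,4}), "
    r"(?P<time>\d{1,2}:\d{2}(?: [AP]M)?) - "
    r"(?P<sender>[^:]+): (?P<text>.*)$"
)


def parse_chat(raw: str, me: str) -> List[Dict[str, str]]:
    """Convert export text into chat turns; `me` becomes the assistant."""
    turns: List[Dict[str, str]] = []
    for line in raw.splitlines():
        match = LINE_RE.match(line)
        if match:
            role = "assistant" if match["sender"] == me else "user"
            turns.append({"role": role, "content": match["text"]})
        elif turns and line.strip():
            # A line without a header continues the previous message.
            turns[-1]["content"] += "\n" + line
    return turns


sample = (
    "12/31/23, 10:15 PM - Alice: Are you coming tonight?\n"
    "12/31/23, 10:16 PM - Bob: Yes!\n"
    "See you at nine.\n"
    "12/31/23, 10:17 PM - Alice: Great"
)
turns = parse_chat(sample, me="Bob")
for turn in turns:
    print(turn["role"], "|", turn["content"].replace("\n", " / "))
```

Mapping your own messages to the assistant role is one plausible way to frame the data for instruction-style fine-tuning; non-English exports would need a different date and time pattern.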

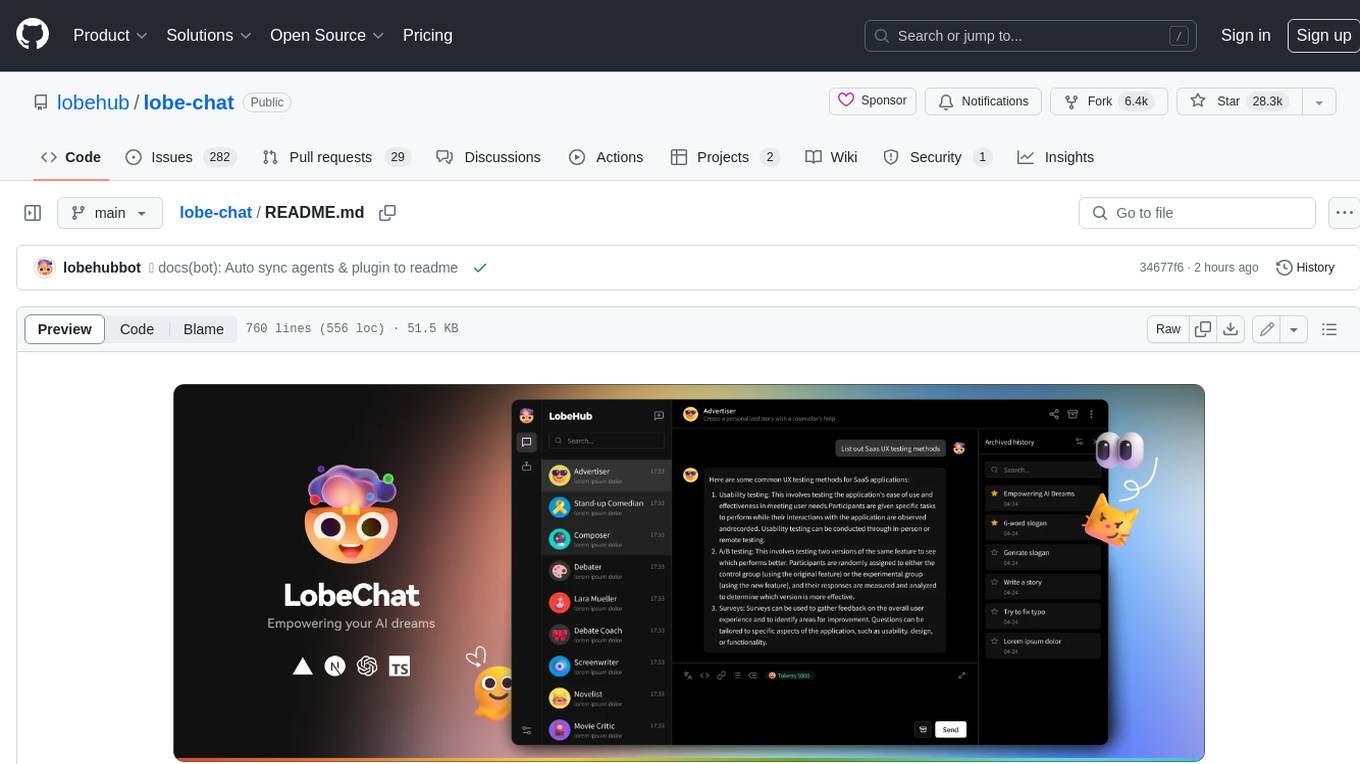

lobe-chat

Lobe Chat is an open-source ChatGPT/LLM UI framework with a modern design. It supports speech synthesis, multimodal input, and an extensible function-call plugin system, and it offers one-click, free deployment of a private OpenAI ChatGPT/Claude/Gemini/Groq/Ollama chat application.

Sentient

Sentient is a personal, private, and interactive AI companion developed by Existence. The project aims to build a completely private AI companion that is deeply personalized and context-aware of the user. It utilizes automation and privacy to create a true companion for humans. The tool is designed to remember information about the user and use it to respond to queries and perform various actions. Sentient features a local and private environment, MBTI personality test, integrations with LinkedIn, Reddit, and more, self-managed graph memory, web search capabilities, multi-chat functionality, and auto-updates for the app. The project is built using technologies like ElectronJS, Next.js, TailwindCSS, FastAPI, Neo4j, and various APIs.

bookmark-summary

The 'bookmark-summary' repository reads bookmarks from 'bookmark-collection', extracts text content using Jina Reader, and then summarizes the text using an LLM. The detailed implementation can be found in 'process_changes.py'. It needs to be used together with the GitHub Action in 'bookmark-collection'.
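The read, extract, summarize flow described above might be sketched as follows. This is not the contents of 'process_changes.py': `summarize_bookmark` and the stub functions are hypothetical, the network fetch and the LLM call are injected as plain callables so the example runs offline, and the only external detail assumed is that Jina Reader is addressed by prefixing the target URL with `https://r.jina.ai/`.

```python
from typing import Callable

# Assumption: Jina Reader returns page text for a URL prefixed like this.
READER_PREFIX = "https://r.jina.ai/"


def reader_url(bookmark_url: str) -> str:
    """Build the reader URL for a bookmarked page."""
    return READER_PREFIX + bookmark_url


def summarize_bookmark(
    bookmark_url: str,
    fetch: Callable[[str], str],
    summarize: Callable[[str], str],
) -> str:
    """Fetch extracted text for a bookmark, then summarize it."""
    text = fetch(reader_url(bookmark_url))
    return summarize(text)


def fake_fetch(url: str) -> str:
    # Stand-in for an HTTP GET; returns text that records the URL used.
    return f"content fetched via {url}"


def first_three_words(text: str) -> str:
    # Stand-in for an LLM summarizer.
    return " ".join(text.split()[:3])


summary = summarize_bookmark(
    "https://example.com/post", fake_fetch, first_three_words
)
print(summary)
```

In a real pipeline, `fetch` would perform the HTTP request and `summarize` would call whichever LLM is configured.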

PurpleLlama

Purple Llama is an umbrella project that aims to provide tools and evaluations to support responsible development and usage of generative AI models. It encompasses components for cybersecurity and input/output safeguards, with plans to expand in the future. The project emphasizes a collaborative approach, borrowing the concept of purple teaming from cybersecurity, to address potential risks and challenges posed by generative AI. Components within Purple Llama are licensed permissively to foster community collaboration and standardize the development of trust and safety tools for generative AI.

Chinese-LLaMA-Alpaca-3

Chinese-LLaMA-Alpaca-3 is a project based on Llama-3, Meta's new-generation open-source large model. It is the third phase of the Chinese-LLaMA-Alpaca open-source large model series, following Phase 1 and Phase 2. This project open-sources the Chinese Llama-3 base model and the Chinese Llama-3-Instruct instruction-tuned model. These models are incrementally pre-trained on a large amount of Chinese data on top of the original Llama-3 and then fine-tuned on selected instruction data, improving basic Chinese semantic understanding and instruction following. Compared with the related second-generation models, they achieve significant performance improvements.

Chinese-LLaMA-Alpaca-2

Chinese-LLaMA-Alpaca-2 is an open-source Chinese large language model project built on Meta's Llama-2 and further trained on a large dataset of Chinese text. It can be used for a variety of natural language processing tasks, including text generation, question answering, and machine translation. Key features:

* Models are available with up to 13 billion parameters.
* It is trained on a large dataset of Chinese text, including books, news articles, and social media posts.
* It is open-source and available for anyone to use.

It is a useful resource for researchers and developers working on Chinese natural language processing.

distributed-llama

Distributed Llama is a tool that allows you to run large language models (LLMs) on weak devices or make powerful devices even more powerful by distributing the workload and dividing the RAM usage. It uses TCP sockets to synchronize the state of the neural network, and you can easily configure your AI cluster by using a home router. Distributed Llama supports models such as Llama 2 (7B, 13B, 70B) chat and non-chat versions, Llama 3, and Grok-1 (314B).
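To make the idea of dividing RAM usage concrete, here is a back-of-the-envelope estimate of weight memory per node. It is a sketch under stated assumptions, not Distributed Llama's actual memory model: it assumes weights are split evenly across nodes and ignores activations, the KV cache, and synchronization buffers. The function name and the 4-bit quantization figure are illustrative choices.

```python
def weights_per_node_gib(
    params_billion: float, bits_per_weight: int, nodes: int
) -> float:
    """Estimate GiB of model weights held by each node (even split)."""
    if nodes < 1:
        raise ValueError("nodes must be at least 1")
    total_bytes = params_billion * 1e9 * bits_per_weight / 8
    return total_bytes / nodes / 2**30


# Hypothetical setup: a 70B-parameter model, 4-bit weights, 4 devices.
estimate = weights_per_node_gib(70, 4, 4)
print(f"{estimate:.2f} GiB of weights per node")
```

Under these assumptions, doubling the number of nodes halves the weight memory each one must hold, which is why a cluster of small devices can host a model that none of them could load alone.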